Artificial Intelligence

If you would like to view my complete work on Artificial Intelligence, click here.

Probability is a foundation of artificial intelligence: in searching and decision making we have to act under uncertainty and still want to find a solution with the least amount of overhead. To search for an optimal solution, we first work out the brute-force way of solving the problem and then learn how to improve on it. Depth First Search (DFS) is a brute-force search method that takes exponential time in the worst case. Stopping DFS at a maximum depth gives depth-limited search, and Iterative Deepening DFS repeats that search with a growing depth limit. Its running time is still exponential in the depth of the shallowest solution, but its memory use is only linear in that depth. A* search can be faster still. It expands nodes in order of f(n) = g(n) + h(n), the cost so far plus a heuristic estimate of the remaining cost, and when the heuristic is admissible (it never overestimates the remaining cost) A* tree search returns a minimum-cost path.
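To make the difference concrete, here is a minimal sketch of Iterative Deepening DFS and A* on a small weighted graph. The graph `GRAPH`, the heuristic table `H`, and all function names are hypothetical and chosen only for illustration; this is not the code from my coursework.

```python
import heapq

# Hypothetical weighted graph: node -> list of (neighbor, step_cost).
GRAPH = {
    "A": [("B", 1), ("C", 4)],
    "B": [("C", 2), ("D", 5)],
    "C": [("D", 1)],
    "D": [],
}
# Hypothetical admissible heuristic: never overestimates the true cost to "D".
H = {"A": 3, "B": 2, "C": 1, "D": 0}


def depth_limited_dfs(node, goal, limit, path):
    """Return a path to goal using at most `limit` more edges, or None."""
    if node == goal:
        return path
    if limit == 0:
        return None
    for neighbor, _cost in GRAPH[node]:
        if neighbor not in path:  # avoid cycles along the current path
            found = depth_limited_dfs(neighbor, goal, limit - 1, path + [neighbor])
            if found is not None:
                return found
    return None


def iddfs(start, goal, max_depth=10):
    """Repeat depth-limited DFS with limits 0, 1, 2, ... until the goal is found."""
    for limit in range(max_depth + 1):
        found = depth_limited_dfs(start, goal, limit, [start])
        if found is not None:
            return found
    return None


def a_star(start, goal):
    """Expand nodes in order of f = g + h; return (cost, path) or None."""
    frontier = [(H[start], 0, start, [start])]  # entries are (f, g, node, path)
    best_g = {start: 0}
    while frontier:
        _f, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return g, path
        if g > best_g.get(node, float("inf")):
            continue  # stale queue entry; a cheaper route was already found
        for neighbor, cost in GRAPH[node]:
            new_g = g + cost
            if new_g < best_g.get(neighbor, float("inf")):
                best_g[neighbor] = new_g
                heapq.heappush(
                    frontier,
                    (new_g + H[neighbor], new_g, neighbor, path + [neighbor]),
                )
    return None
```

On this graph, `iddfs("A", "D")` returns the path with the fewest edges, A, B, D, because it ignores step costs, while `a_star("A", "D")` returns the cheaper path A, B, C, D with a total cost of 4.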

If you would like to see my work on Probability, Depth First Search, Iterative Deepening Depth First Search, and A* Search, click here.

Minimax is a general adversarial state-space search algorithm that finds the utility of each state, assuming the player is up against a rational (optimal) adversary. The problem with minimax is that it is slow: it runs in O(b^m) time, where b is the branching factor and m is the depth of the tree. We can improve on this with Alpha-Beta Pruning. Alpha-Beta Pruning computes the same minimax value, but it prunes the remaining branches of a node as soon as the optimal solution is known not to lie down that path (the node is already bad enough that it won't be played). In the best case, with perfect move ordering, Alpha-Beta Pruning runs in O(b^(m/2)) time. Depth-limited Alpha-Beta Pruning cuts the search off at a fixed depth and scores the states it reaches with a heuristic evaluation function instead of the utility function, so we lose the guarantee of an optimal solution. The heuristic should be correlated with the actual chances of winning so that the search still moves toward good outcomes.
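The effect of pruning can be seen on a tiny game tree. The sketch below is a hedged illustration, not my coursework code: the tree is a hypothetical nested list whose integer leaves are utilities, and the one-element `counter` list is just a convenient way to count how many leaves each function evaluates.

```python
import math


def minimax(node, maximizing, counter):
    """Plain minimax over a nested-list tree; counts evaluated leaves."""
    if isinstance(node, int):
        counter[0] += 1
        return node
    values = [minimax(child, not maximizing, counter) for child in node]
    return max(values) if maximizing else min(values)


def alphabeta(node, maximizing, counter, alpha=-math.inf, beta=math.inf):
    """Minimax with alpha-beta pruning; returns the same value as minimax."""
    if isinstance(node, int):
        counter[0] += 1
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, counter, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizer above will never allow this branch
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, counter, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return value


# Hypothetical depth-2 tree: the root is a max node with three min children.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

plain_count, pruned_count = [0], [0]
plain_value = minimax(TREE, True, plain_count)
pruned_value = alphabeta(TREE, True, pruned_count)
```

On this tree both functions return 3, but minimax evaluates all 9 leaves while alpha-beta evaluates 7: after seeing the 2 in the second branch, it skips the 4 and the 6. A depth-limited version would add a depth parameter and call a heuristic evaluation function when the limit is reached.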

If you would like to see my work on Minimax and Depth-Limited Alpha-Beta Pruning, including a demonstration that Depth-Limited Alpha-Beta Pruning is much faster than minimax, click here.

Constraint Satisfaction Problems (CSPs) can often be solved faster than problems handled by a general state-space search, like Minimax. The goal is to search for a complete and consistent assignment, where consistent means the assignment does not violate any constraint and complete means all variables are assigned. There are 3 Constraint Satisfaction Problems that I have solved. The first is a linear graph whose nodes take one of 5 colors, where each color has constraints; the goal is to find a complete and consistent assignment of the colors. The second is the n-rooks problem, which is to arrange n rooks on an n x n board so that no rook can attack another with any of its horizontal or vertical moves; my goal was to prove how many possible solutions there are to this CSP. The final problem is the n-queens problem, which is similar to the n-rooks problem but also includes diagonal attacks, like the queen in chess; my goal was to show how many possible solutions there are to this CSP for an 8x8 and an 11x11 board.
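As a rough sketch of how such solutions can be counted, the backtracking search below assigns one piece per row and rejects any column (and, for queens, any diagonal) that is already taken. The function name `count_solutions` and its `diagonals` flag are hypothetical; this is an illustration of the idea rather than my actual solver.

```python
def count_solutions(n, diagonals=True):
    """Count complete, consistent placements of n pieces, one per row.

    With diagonals=True the pieces are queens; with diagonals=False, rooks.
    """
    cols, diag1, diag2 = set(), set(), set()

    def place(row):
        if row == n:
            return 1  # complete assignment: every row has a piece
        total = 0
        for col in range(n):
            if col in cols:
                continue  # column constraint violated
            if diagonals and (row - col in diag1 or row + col in diag2):
                continue  # diagonal constraint violated
            cols.add(col)
            if diagonals:
                diag1.add(row - col)
                diag2.add(row + col)
            total += place(row + 1)
            # Undo the assignment before trying the next column (backtrack).
            cols.remove(col)
            if diagonals:
                diag1.remove(row - col)
                diag2.remove(row + col)
        return total

    return place(0)


queens_8 = count_solutions(8)                    # 92 solutions on an 8x8 board
rooks_4 = count_solutions(4, diagonals=False)    # 4! = 24 rook placements
```

For rooks the count is n!, since each row simply picks a column no earlier row has used. The same call with `n=11` counts the 11x11 queens solutions, though it takes noticeably longer than the 8x8 case.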

If you would like to see my work on Constraint Satisfaction Problems, click here.

Bayesian Networks are probabilistic graphical models that represent knowledge about uncertain domains: each node is a random variable, and each node carries a conditional probability distribution given its parent variables. Logistic Regression gives a natural probabilistic view of class predictions. It is easily extended to multiple classes, quick to train, fast at classification, accurate on many datasets, and resistant to overfitting. However, its linear decision boundary is too simple for complex problems.
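A small example may help show what a Bayesian Network encodes. The sketch below assumes a hypothetical three-node network (Rain and Sprinkler are independent parents of WetGrass) with made-up probabilities, and answers a query by enumerating the joint distribution. None of the numbers come from my coursework.

```python
from itertools import product

# Hypothetical network: Rain -> WetGrass <- Sprinkler (all values are made up).
P_RAIN = 0.2
P_SPRINKLER = 0.1
# P(WetGrass = True | Rain, Sprinkler)
P_WET = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Joint probability as the product of each node's conditional probability."""
    p = P_RAIN if rain else 1 - P_RAIN
    p *= P_SPRINKLER if sprinkler else 1 - P_SPRINKLER
    p_wet = P_WET[(rain, sprinkler)]
    p *= p_wet if wet else 1 - p_wet
    return p


def posterior_rain_given_wet():
    """P(Rain = True | WetGrass = True) by summing out the Sprinkler variable."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    denominator = sum(
        joint(r, s, True) for r, s in product((True, False), repeat=2)
    )
    return numerator / denominator


posterior = posterior_rain_given_wet()  # roughly 0.74 with these numbers
```

With these assumed numbers, seeing wet grass raises the probability of rain from the prior of 0.2 to about 0.74. Enumeration is only practical for tiny networks, but it shows how the graph structure turns the joint distribution into a product of small conditional tables.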

If you would like to see my work on Bayesian Networks and Logistic Regression, click here.

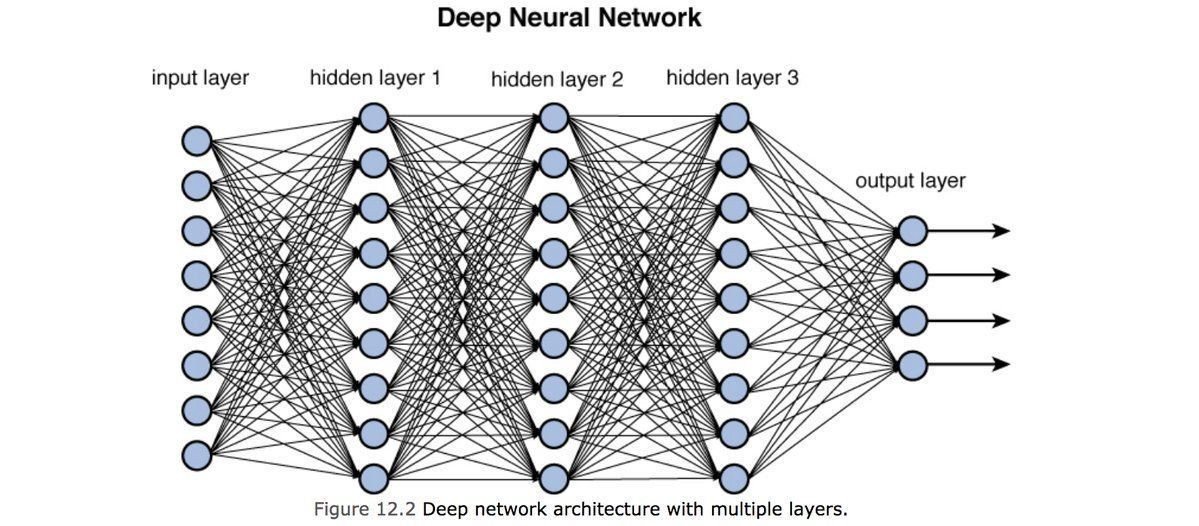

Weight learning algorithms work by making thousands of tiny adjustments, each making the network do better on the most recent pattern, but perhaps a little worse on many others. Eventually, this tends to be good enough to learn effective classifiers for many real applications. There are 3 steps in Deep Learning: define a set of functions, define the goodness of a function, and pick the best function. The set of functions is defined by the network itself: its structure and its activation functions, which determine how neurons communicate with each other through their synapses (the weighted connections), with each choice of weights giving one function in the set. The goodness of a function is measured by the total loss over the training data: the lower the total loss, the better the function. Picking the best function then means finding the network parameters that minimize the total loss.
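The three steps above can be sketched with the smallest possible network: a single sigmoid neuron trained by gradient descent to compute logical AND. Everything here (the `DATA` table, the function names, the learning rate and step count) is an assumption made for illustration, and a real deep network would stack many such neurons and use backpropagation to get the gradients.

```python
import math

# Hypothetical training set: inputs and targets for logical AND.
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def sigmoid(z):
    """Activation function squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Step 1: define a set of functions. Each choice of (w1, w2, b) is one function.
def predict(params, x):
    w1, w2, b = params
    return sigmoid(w1 * x[0] + w2 * x[1] + b)


# Step 2: goodness of a function, measured as mean cross-entropy loss.
def total_loss(params):
    loss = 0.0
    for x, y in DATA:
        p = predict(params, x)
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return loss / len(DATA)


# Step 3: pick the best function by many tiny gradient-descent adjustments.
def train(steps=5000, lr=1.0):
    params = [0.0, 0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for x, y in DATA:
            err = predict(params, x) - y  # d(loss)/d(pre-activation)
            grad[0] += err * x[0]
            grad[1] += err * x[1]
            grad[2] += err
        params = [p - lr * g / len(DATA) for p, g in zip(params, grad)]
    return params


trained = train()
predictions = [round(predict(trained, x)) for x, _ in DATA]
```

After training, the rounded predictions match the AND targets and the loss is lower than at the all-zero starting point. A single neuron like this can only draw a linear decision boundary, which is the limitation that hidden layers are meant to overcome.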

If you would like to see my work on Deep Learning and Neural Networks, click here.